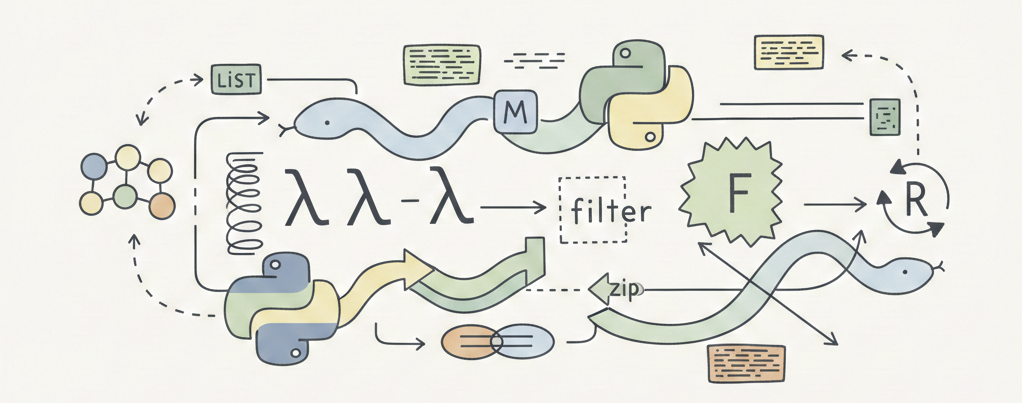

Choosing Functional Python (Without Forcing It)

Why this matters

Python gives you multiple ways to solve the same problem. Loops, comprehensions, generators, map, filter, reduce, any, sorted, and more.

For a long time, I knew these tools existed, but I didn’t really know when or why to use them. I could read functional-style code, but writing it confidently was another story.

This document is not a tutorial on functional programming in Python. It’s a set of notes, patterns, and mental models that helped things finally click for me.

What I mean by Functional Programming in Python (FPP)

Python is not a purely functional language, and trying to force purity usually makes code worse.

When I say FPP here, I mean using a functional style where it makes the code clearer.

In practice, that usually looks like:

- Expressing logic as transformations instead of step-by-step instructions.

- Minimizing mutation when it improves readability.

- Leaning on higher‑order functions when they describe intent better than loops.

The goal is not purity. The goal is clarity.

When to use FPP (and when not to)

The most important rule I learned:

Use functional style when your problem is about transforming data, not managing evolving state.

When FPP works well

FPP shines when the problem is primarily about transforming data.

Typical cases include:

- Mapping values from one form to another.

- Filtering collections based on conditions.

- Aggregation like

sum,max,min,any, orall. - Sorting with clear, composable rules.

- Counting or grouping values.

- Building lazy pipelines with generators.

When FPP is a bad fit

Functional style starts to break down when the solution depends on evolving state or control flow.

This usually shows up in problems that:

- Require early exits.

- Depend on remembering what has been seen so far.

- Involve search or traversal logic.

In these cases, a simple loop is often the most readable solution. Trying to force functional style here often makes the code harder to read, not better.

Patterns that unlocked things for me

Pattern 1: Existence checks using any

Many problems boil down to a simple question: does something exist?

Instead of writing loops that return early, it’s often clearer to express that intent directly:

from math import sqrt

n = 17

# Returns True because 17 has no divisors other than 1 and itself

has_no_divisors = not any(n % i == 0 for i in range(2, int(sqrt(n)) + 1))

This reads almost like English: there does not exist a divisor. Once I started framing problems this way, a lot of validation and checking logic became easier to reason about.

Pattern 2: Tuple keys in sorted

Sorting often involves more than one rule. Python’s tuple comparison makes this surprisingly clean.

students = [

{"name": "Alice", "score": 90, "age": 20},

{"name": "Bob", "score": 90, "age": 19},

{"name": "Charlie", "score": 80, "age": 21}

]

# Sort by score (descending), then by age (ascending)

sorted_students = sorted(students, key=lambda s: (-s["score"], s["age"]))

Here, the intent is encoded directly in the key:

- Score in descending order (negated).

- Age in ascending order.

Negating values tends to be clearer than mixing reverse=True with complex logic.

Pattern 3: reduce is for building structure

reduce works best when you are accumulating something — a dictionary, a count, or a combined result.

from functools import reduce

s = "abracadabra"

counts = reduce(

lambda acc, c: {**acc, c: acc.get(c, 0) + 1},

s,

{}

)

# Result: {'a': 5, 'b': 2, 'r': 2, 'c': 1, 'd': 1}

Note: While this demonstrates the pattern, for simple counting, collections.Counter is usually preferred in production code.

Where reduce tends to fall apart is control flow. If a solution feels hard to read, that’s often a sign the problem wants a loop instead.

A quick before → after example

One pattern that helped anchor all of this for me was rewriting a small loop and asking a simple question: is this transformation or state management?

Before: imperative loop

from math import sqrt

def is_prime(n):

if n < 2:

return False

for i in range(2, int(sqrt(n)) + 1):

if n % i == 0:

return False

return True

This works, but the intent is buried inside the loop.

After: functional style

def is_prime(n):

if n < 2:

return False

return not any(n % i == 0 for i in range(2, int(sqrt(n)) + 1))

Here, the logic reads closer to the actual rule: a prime has no divisors. No explicit looping is required.

Generators: the mental model that helped

My confusion with generators wasn’t about syntax. It was about mixing up producers and consumers.

A key realization:

A

forloop doesn’t care aboutyield. It only cares whether an object is iterable.

def gen_one():

# Generator expression (lazy)

return (i for i in range(5))

def gen_two():

# Explicit generator delegation

yield from range(5)

Both return generator objects. The difference is who controls the iteration. yield from allows you to delegate part of the generation process to another iterable explicitly.

Filtering vs conditional mapping

These two look similar but mean very different things.

Filtering: Keep only the even numbers.

n = 10

evens = (i for i in range(n) if i % 2 == 0)

Conditional mapping: Keep all numbers, but change the odd ones to 0.

# if / else BEFORE the 'for' transforms

transformed = (i if i % 2 == 0 else 0 for i in range(n))

Rule of thumb:

ifafter theforfilters.if / elsebefore thefortransforms.

zip: aligning data instead of indexing

zip became much clearer to me once I stopped thinking about indices and started thinking about alignment.

names = ["amar", "john", "alice"]

scores = [90, 80, 85]

aligned = list(zip(names, scores))

# Result: [('amar', 90), ('john', 80), ('alice', 85)]

This expresses the idea that these two collections belong together, position by position.

A simple mental shortcut I use:

maptransforms values.zipaligns values.

Unzipping (and the iterator gotcha)

pairs = [("a", 1), ("b", 2), ("c", 3)]

letters, numbers = zip(*pairs)

One important detail: zip returns an iterator. If you consume it once, it’s exhausted. If you need to use the data multiple times, convert it to a list first.

functools.partial: pre-filling intent

I found partial useful once I stopped seeing it as an optimization and started seeing it as a way to name behavior.

from functools import partial

def multiply(x, factor):

return x * factor

# Create a new function that always multiplies by 2

double = partial(multiply, factor=2)

print(double(10)) # 20

Instead of repeating the same lambda everywhere, partial makes the intent explicit and reusable.

A closing thought

Functional-style Python didn’t click for me by memorizing APIs or forcing myself to avoid loops. It clicked when I started asking a simpler question: what kind of problem is this?

If the problem is about transforming data, functional tools often make the intent clearer. If it’s about managing state or control flow, a loop is usually the right choice.

That distinction alone has helped me write Python that is easier to read, easier to reason about, and easier to change later.

Lastly, thank you for reading this post. For more awesome posts, you can explore my other articles here, and follow me on Github — amarlearning.

#python #functional-programming #clean-code #software-design #programming-patterns #pythonic